Large Language Models (LLMs) have moved rapidly from experimentation to real business use. Many organizations now have working demos, internal tools, or early customer-facing features powered by LLMs. Yet very few of these systems are truly production-ready.

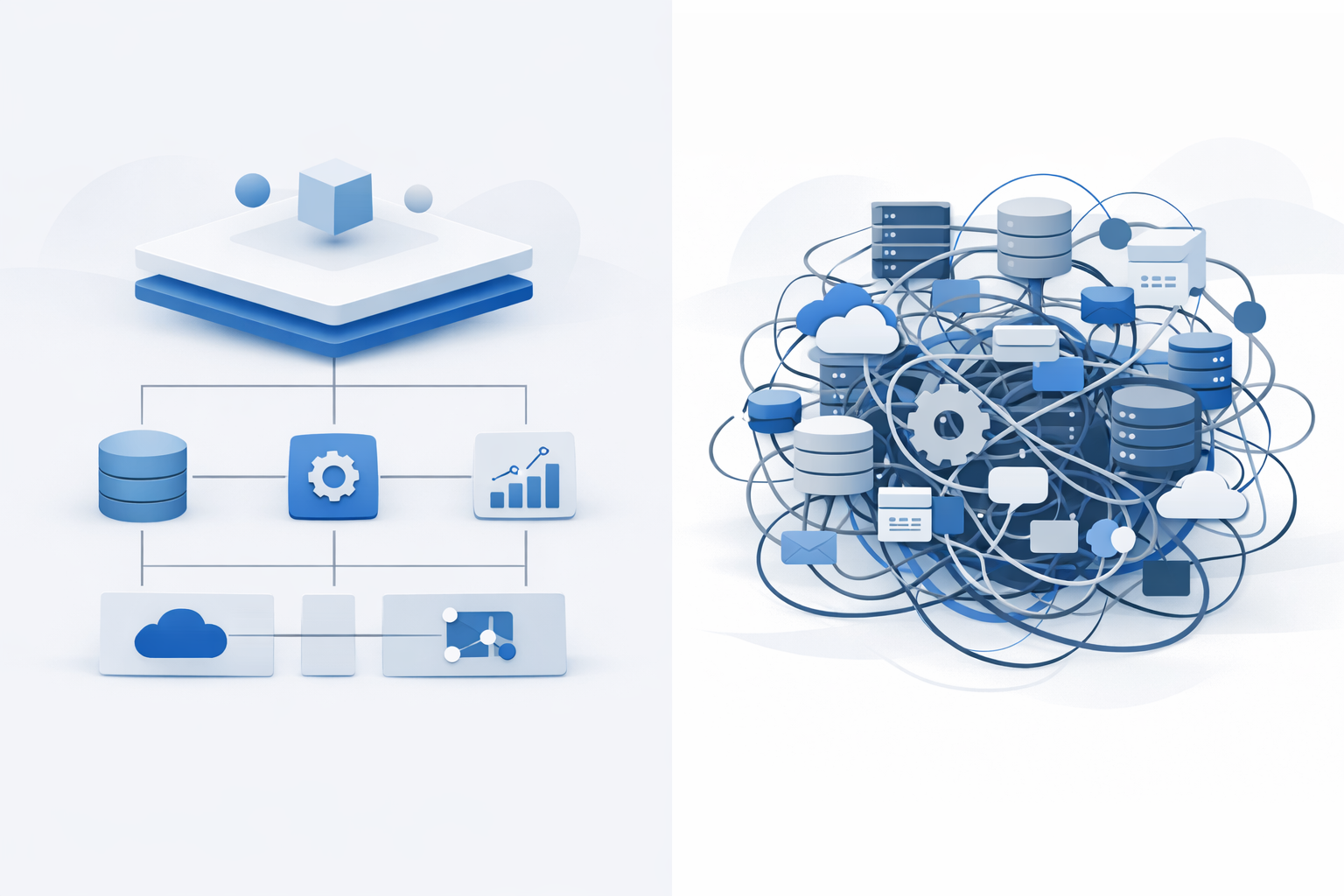

The reason is rarely the model itself. The real challenge is architecture.

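LLMs place fundamentally different demands on systems than traditional software: their outputs are non-deterministic, their cost grows with every token processed, and their response times are often measured in seconds rather than milliseconds. Without an LLM-ready architecture, teams face unstable performance, rising costs, security risks, and features that cannot scale beyond limited usage.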

What “LLM-Ready” Really Means

An LLM-ready architecture is not defined by:

- which model you use,

- how advanced your prompts are,

- or whether you support chat.

An LLM-ready architecture is one that:

- integrates LLMs as replaceable components, not core dependencies,

- controls cost, latency, and failure modes,

- supports observability and governance,

- scales usage without linear cost growth,

- and evolves as models and use cases change.

In short, it treats LLMs as infrastructure capabilities, not experiments.

Why Most LLM Architectures Break in Production

Teams often start with a simple flow:

User → Prompt → LLM → Response

This works for demos, but breaks down quickly when:

- usage increases,

- workflows become complex,

- errors matter,

- or costs must be controlled.

Common failure points include:

- tight coupling between product logic and the LLM,

- no cost or latency visibility,

- lack of fallback or degradation strategies,

- poor handling of sensitive data,

- no way to test or audit behavior.

An LLM-ready architecture addresses these issues from the start.

Principle 1: Treat the LLM as an External Dependency

LLMs should be decoupled from core business logic.

This means:

- no hard-coded prompts in application logic,

- no direct calls scattered across the codebase,

- no assumptions that the model will always respond correctly.

Instead:

- route all LLM interactions through a dedicated service or layer,

- standardize inputs and outputs,

- make the model replaceable without rewriting the system.

This allows teams to:

- switch models,

- adjust prompts,

- add safety layers,

- and control behavior centrally.
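A minimal Python sketch of such a layer follows. `LLMService`, `ModelClient`, `LLMResult` and the two stand-in models are hypothetical names chosen for illustration, not a specific library's API; a real client would call a provider SDK inside `complete`. Prompt templates live in one registry keyed by task, callers always receive the same standardized result type, and the model can be swapped without touching any calling code.

```python
from dataclasses import dataclass
from typing import Dict, Protocol


class ModelClient(Protocol):
    """Hypothetical interface: anything that turns a prompt into text."""

    name: str

    def complete(self, prompt: str) -> str: ...


@dataclass(frozen=True)
class LLMResult:
    """Standardized output handed back to application code."""

    task: str
    model: str
    text: str


class LLMService:
    """Single entry point for LLM calls; prompt templates live here, not in callers."""

    def __init__(self, client: ModelClient, prompts: Dict[str, str]) -> None:
        self._client = client
        self._prompts = prompts  # task name -> prompt template

    def swap_model(self, client: ModelClient) -> None:
        # Replace the model without changing any code that calls run().
        self._client = client

    def run(self, task: str, **variables: str) -> LLMResult:
        prompt = self._prompts[task].format(**variables)
        text = self._client.complete(prompt)
        return LLMResult(task=task, model=self._client.name, text=text.strip())


# Stand-in "models" used only to demonstrate the swap.
class UppercaseModel:
    name = "model-a"

    def complete(self, prompt: str) -> str:
        return prompt.upper()


class ReverseModel:
    name = "model-b"

    def complete(self, prompt: str) -> str:
        return prompt[::-1]


service = LLMService(UppercaseModel(), {"summarize": "Summarize: {text}"})
print(service.run("summarize", text="hello"))
# LLMResult(task='summarize', model='model-a', text='SUMMARIZE: HELLO')
service.swap_model(ReverseModel())
print(service.run("summarize", text="hello").model)  # model-b
```

Because application code only ever sees `LLMResult`, adding retries, safety filters or a different provider later is a change inside `LLMService`, not across the codebase.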

Principle 2: Design for Cost Awareness and Control

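LLMs introduce variable and often opaque costs. Most hosted models are billed per token, so spend depends on prompt size, response length, and call volume rather than on a fixed server budget.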

An LLM-ready architecture:

- tracks token usage per feature,

- ties cost to business outcomes,

- supports limits and budgets,

- avoids unbounded or recursive calls.

Without this, teams are often surprised by:

- runaway API costs,

- unpredictable monthly spend,

- features that are too expensive to keep enabled.

Cost observability is not an optimization – it is a requirement.
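As a sketch of what per-feature tracking and budgets can look like in code, the class below records token usage per feature and refuses any request whose worst-case cost would exceed that feature's budget. `CostTracker`, `BudgetExceeded` and the per-million-token prices are hypothetical; real prices differ by provider and model, and real token counts would come from the usage data the provider returns. `Decimal` is used to avoid floating-point drift in money arithmetic.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from decimal import Decimal
from typing import Dict


class BudgetExceeded(Exception):
    """Raised when a request could push a feature past its spending limit."""


@dataclass
class CostTracker:
    # Hypothetical prices in USD per 1 million tokens; real prices vary by provider and model.
    input_price_per_m: Decimal
    output_price_per_m: Decimal
    budgets: Dict[str, Decimal]  # feature name -> maximum spend for the period
    spent: Dict[str, Decimal] = field(default_factory=lambda: defaultdict(Decimal))
    tokens: Dict[str, int] = field(default_factory=lambda: defaultdict(int))

    def cost(self, input_tokens: int, output_tokens: int) -> Decimal:
        return (
            input_tokens * self.input_price_per_m
            + output_tokens * self.output_price_per_m
        ) / 1_000_000

    def check(self, feature: str, input_tokens: int, max_output_tokens: int) -> None:
        """Before the call: reject it if the worst case would exceed the budget."""
        worst_case = self.cost(input_tokens, max_output_tokens)
        # Features without an explicit budget get zero, so they cannot spend by accident.
        if self.spent[feature] + worst_case > self.budgets.get(feature, Decimal(0)):
            raise BudgetExceeded(f"{feature}: budget exhausted")

    def record(self, feature: str, input_tokens: int, output_tokens: int) -> Decimal:
        """After the call: book the actual usage reported by the provider."""
        amount = self.cost(input_tokens, output_tokens)
        self.spent[feature] += amount
        self.tokens[feature] += input_tokens + output_tokens
        return amount


tracker = CostTracker(
    input_price_per_m=Decimal("0.50"),
    output_price_per_m=Decimal("1.50"),
    budgets={"ticket_summary": Decimal("0.01")},
)
calls = 0
try:
    while True:  # simulate steady traffic until the budget stops it
        tracker.check("ticket_summary", input_tokens=2000, max_output_tokens=500)
        tracker.record("ticket_summary", input_tokens=2000, output_tokens=300)
        calls += 1
except BudgetExceeded as exc:
    print(f"stopped after {calls} calls: {exc}")
```

Checking the worst case before the call and recording actual usage after it keeps a feature from overshooting its limit even when responses vary in length.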

Principle 3: Separate Intelligence From Workflow

One of the biggest mistakes teams make is letting LLMs own entire workflows.

Instead:

- workflows should be deterministic,

- LLMs should contribute intelligence at defined steps,

- business rules should remain explicit.

For example:

- LLM suggests a classification,

- system validates it,

- workflow decides what happens next.
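A minimal sketch of that three-step split, assuming the model has been asked to reply with JSON such as `{"category": "...", "confidence": 0.9}`. The category set, the 0.8 threshold and the queue names are hypothetical business rules. The point is that validation and routing are plain, testable code, and anything the model gets wrong falls through to human review instead of triggering an action.

```python
import json
from dataclasses import dataclass
from typing import Optional

# Hypothetical business rules, kept explicit in code rather than in the prompt.
ALLOWED_CATEGORIES = {"billing", "technical", "account"}
MIN_CONFIDENCE = 0.8


@dataclass(frozen=True)
class Suggestion:
    category: str
    confidence: float


def validate(raw_output: str) -> Optional[Suggestion]:
    """System step: accept the model's suggestion only if it passes explicit checks."""
    try:
        data = json.loads(raw_output)
        category = str(data["category"]).strip().lower()
        confidence = float(data["confidence"])
    except (KeyError, TypeError, ValueError):  # json.JSONDecodeError is a ValueError
        return None
    if category not in ALLOWED_CATEGORIES or not 0.0 <= confidence <= 1.0:
        return None
    return Suggestion(category, confidence)


def route_ticket(raw_output: str) -> str:
    """Workflow step: deterministic code, not the model, decides what happens next."""
    suggestion = validate(raw_output)
    if suggestion is None or suggestion.confidence < MIN_CONFIDENCE:
        return "human_review"
    return f"queue:{suggestion.category}"


print(route_ticket('{"category": "Billing", "confidence": 0.93}'))  # queue:billing
print(route_ticket('{"category": "refunds", "confidence": 0.99}'))  # human_review
print(route_ticket("Sure! This looks like a billing issue."))  # human_review
```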

This separation:

- reduces risk,

- improves debuggability,

- and enables partial automation safely.

It is also the foundation for moving from generative AI to agentic AI later.

Principle 4: Build for Latency and Failure

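LLM calls are slower and less predictable than calls to traditional services: a single response can take several seconds, providers enforce rate limits, and outages do happen.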

LLM-ready architectures:

- assume responses may be slow or unavailable,

- use async processing where possible,

- cache results when appropriate,

- degrade gracefully when models fail.

This is critical for:

- user-facing applications,

- high-volume systems,

- or time-sensitive workflows.

Users will tolerate reduced intelligence – but not broken experiences.

Principle 5: Data Boundaries and Security First

LLMs often touch sensitive data.

Architectures must:

- clearly define what data can be sent to models,

- sanitize and redact inputs,

- mitigate prompt injection and data leakage,

- log interactions safely,

- support audit requirements.

This is especially important for:

- regulated industries,

- internal tools with privileged access,

- customer-facing AI features.

LLM-ready does not mean “send everything to the model.”
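A simplified sketch of the first two rules: an explicit allow-list of fields, plus redaction before anything reaches the model. The field names and regular expressions are illustrative assumptions. Patterns like these miss many formats and can flag harmless numbers, so real systems typically pair them with dedicated PII-detection tooling. Redaction also does nothing against prompt injection, which needs its own safeguards.

```python
import re
from typing import Dict, Tuple

# Hypothetical allow-list: only these ticket fields may ever be sent to a model.
ALLOWED_FIELDS = ("subject", "body")

# Illustrative patterns only; order matters (card numbers before phone numbers).
REDACTION_RULES = [
    ("EMAIL", re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")),
    ("CARD", re.compile(r"\b(?:\d[ -]?){13,16}\b")),
    ("PHONE", re.compile(r"\+?\d[\d ().-]{7,}\d")),
]


def redact(text: str) -> Tuple[str, Dict[str, int]]:
    """Replace sensitive substrings with placeholders and count what was removed."""
    counts: Dict[str, int] = {}
    for label, pattern in REDACTION_RULES:
        text, counts[label] = pattern.subn(f"[{label}]", text)
    return text, counts


def build_model_input(ticket: Dict[str, str]) -> str:
    """Send only allow-listed fields, and only after redaction."""
    parts = []
    for name in ALLOWED_FIELDS:
        if name in ticket:
            clean, _ = redact(ticket[name])
            parts.append(f"{name}: {clean}")
    return "\n".join(parts)


ticket = {
    "subject": "Refund request",
    "body": "Contact jane.doe@example.com or +1 555-123-4567, card 4111 1111 1111 1111.",
    "ssn": "123-45-6789",  # never allow-listed, so never sent
}
print(build_model_input(ticket))
# subject: Refund request
# body: Contact [EMAIL] or [PHONE], card [CARD].
```

The counts returned by `redact` can be written to an audit log without storing the sensitive values themselves.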

Principle 6: Observability for LLM Behavior

Traditional observability focuses on:

- uptime,

- latency,

- errors.

LLM-ready systems also need visibility into:

- prompt versions,

- output quality,

- failure patterns,

- hallucination frequency,

- retry behavior.

Without this, teams cannot:

- debug issues,

- improve outputs,

- or justify AI investments.

LLMs must be observable systems, not black boxes.
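A minimal sketch of capturing that metadata for every call using only the standard library. `call_with_observability`, `LLMCallRecord` and the field names are hypothetical. They show one structured log line per call carrying the prompt version, model, attempt count, latency and outcome. Raw prompts and responses are deliberately left out so the logs stay safe, and validation failures serve only as a rough, measurable proxy for output-quality problems such as hallucinations.

```python
import json
import logging
import time
import uuid
from dataclasses import asdict, dataclass
from typing import Callable, Optional, Tuple

logger = logging.getLogger("llm.calls")


@dataclass
class LLMCallRecord:
    call_id: str
    feature: str
    prompt_id: str
    prompt_version: str
    model: str
    attempts: int
    latency_ms: float
    outcome: str  # "ok", "invalid_output", or "error"


def call_with_observability(
    feature: str,
    prompt_id: str,
    prompt_version: str,
    model: str,
    call: Callable[[], str],
    validate: Callable[[str], bool],
    max_attempts: int = 3,
) -> Tuple[Optional[str], LLMCallRecord]:
    start = time.perf_counter()
    result: Optional[str] = None
    outcome = "error"
    attempts = 0
    for attempts in range(1, max_attempts + 1):
        try:
            text = call()
        except ConnectionError:
            outcome = "error"  # transient failure: retry
            continue
        if validate(text):
            result, outcome = text, "ok"
            break
        outcome = "invalid_output"  # e.g. malformed JSON; counted as a quality signal
    record = LLMCallRecord(
        call_id=str(uuid.uuid4()),
        feature=feature,
        prompt_id=prompt_id,
        prompt_version=prompt_version,
        model=model,
        attempts=attempts,
        latency_ms=round((time.perf_counter() - start) * 1000, 1),
        outcome=outcome,
    )
    # One structured line per call: metadata only, never raw prompts or user data.
    logger.info(json.dumps(asdict(record)))
    return result, record


def is_json(text: str) -> bool:
    try:
        json.loads(text)
        return True
    except json.JSONDecodeError:
        return False


# Stand-in model: fails once, returns malformed output once, then succeeds.
replies = iter([ConnectionError("timeout"), "not json", '{"category": "billing"}'])


def flaky_model() -> str:
    reply = next(replies)
    if isinstance(reply, Exception):
        raise reply
    return reply


logging.basicConfig(level=logging.INFO, format="%(message)s")
result, record = call_with_observability(
    "ticket_triage", "triage", "v3", "model-a", flaky_model, is_json
)
print(record.attempts, record.outcome)  # 3 ok
```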

Principle 7: Support Multiple AI Patterns

A mature LLM-ready architecture supports multiple patterns:

- simple prompt-based generation,

- Retrieval-Augmented Generation (RAG),

- tool-using agents,

- human-in-the-loop workflows.

Hardcoding one approach limits future evolution.

Flexible architectures allow teams to:

- start with RAG,

- layer in automation gradually,

- introduce agentic capabilities responsibly.
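To make "supports multiple patterns" concrete, the sketch below puts prompt-only generation, a toy RAG pipeline and a human-in-the-loop wrapper behind one hypothetical `Pattern` interface, so a feature can move from one pattern to another by changing a single registry entry. The keyword-overlap retriever is a deliberate simplification (real RAG systems usually rely on embeddings and a vector index), and the `echo` model simply returns its prompt so the output is easy to inspect. A tool-using agent could be added as another class behind the same interface.

```python
import re
from typing import Callable, Dict, List, Protocol

Model = Callable[[str], str]  # any text-in, text-out model client


class Pattern(Protocol):
    def run(self, query: str) -> str: ...


class PromptOnly:
    def __init__(self, model: Model) -> None:
        self.model = model

    def run(self, query: str) -> str:
        return self.model(f"Answer briefly: {query}")


class RetrievalAugmented:
    def __init__(self, model: Model, documents: List[str], top_k: int = 2) -> None:
        self.model, self.documents, self.top_k = model, documents, top_k

    def _retrieve(self, query: str) -> List[str]:
        # Toy retriever: rank documents by the number of words shared with the query.
        words = set(re.findall(r"\w+", query.lower()))
        return sorted(
            self.documents,
            key=lambda doc: len(words & set(re.findall(r"\w+", doc.lower()))),
            reverse=True,
        )[: self.top_k]

    def run(self, query: str) -> str:
        context = "\n".join(self._retrieve(query))
        return self.model(f"Context:\n{context}\n\nQuestion: {query}")


class HumanReview:
    """Wraps any pattern: drafts go to a review queue instead of straight to users."""

    def __init__(self, inner: Pattern, queue: List[str]) -> None:
        self.inner, self.queue = inner, queue

    def run(self, query: str) -> str:
        self.queue.append(self.inner.run(query))
        return "Your request is being reviewed by our team."


def echo(prompt: str) -> str:
    return prompt  # stand-in model that returns its prompt unchanged


docs = ["Refunds are processed within 5 days.", "Passwords can be reset from the login page."]
review_queue: List[str] = []
features: Dict[str, Pattern] = {
    "faq": PromptOnly(echo),
    "help_center": RetrievalAugmented(echo, docs, top_k=1),
    "refund_reply": HumanReview(PromptOnly(echo), review_queue),
}
print(features["help_center"].run("How do I reset my password?"))
```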

How LLM-Ready Architecture Supports Product Growth

From a business perspective, LLM-ready systems enable:

- faster iteration without rewrites,

- safer experimentation,

- predictable scaling,

- easier compliance,

- better ROI tracking.

From an engineering perspective, they:

- reduce technical debt,

- isolate risk,

- improve testability,

- and support long-term maintainability.

This alignment is what separates AI features from AI platforms.

Common Anti-Patterns to Avoid

- Embedding prompts directly in UI or backend logic

- Letting the LLM decide critical business actions alone

- Ignoring cost until usage spikes

- Treating LLM responses as deterministic

- Building one-off AI features without shared infrastructure

These shortcuts save time early, but cost far more later.

LLM-Ready Architecture and Scaling

As usage grows, architecture becomes the difference between:

- scaling usage,

- or turning features off due to cost or instability.

LLM-ready systems:

- throttle requests by priority and budget,

- prioritize high-value use cases,

- support gradual rollout,

- and keep human oversight where needed.

This makes AI adoption sustainable, not fragile.
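A compact sketch of two of these mechanisms: a token-bucket rate limiter that keeps headroom for high-priority requests, and a deterministic percentage rollout keyed on a hash of the user ID. The class, priority levels, reserve sizes and feature name are hypothetical. The injectable clock exists only to make the demo deterministic.

```python
import hashlib
import time
from typing import Callable, Dict


class TokenBucket:
    """Token bucket: refills at `rate` tokens per second, holds at most `capacity`."""

    def __init__(
        self, rate: float, capacity: float, clock: Callable[[], float] = time.monotonic
    ) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self.clock = clock
        self.updated = clock()

    def try_acquire(self, reserve: float = 0.0) -> bool:
        """Take one token only if at least `reserve` tokens remain afterwards."""
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens - 1 >= reserve:
            self.tokens -= 1
            return True
        return False


# Hypothetical policy: low-priority features must leave 5 tokens for high-priority ones.
RESERVE_BY_PRIORITY: Dict[str, float] = {"high": 0.0, "low": 5.0}


def allow_request(bucket: TokenBucket, priority: str) -> bool:
    return bucket.try_acquire(reserve=RESERVE_BY_PRIORITY[priority])


def in_rollout(user_id: str, feature: str, percent: int) -> bool:
    """A user always hashes to the same slot, so raising `percent` only adds users."""
    digest = hashlib.sha256(f"{feature}:{user_id}".encode()).digest()
    slot = int.from_bytes(digest[:2], "big") % 100  # roughly uniform slot in 0..99
    return slot < percent


now = [0.0]  # fake clock so the demo is deterministic
bucket = TokenBucket(rate=1.0, capacity=10, clock=lambda: now[0])
low = sum(allow_request(bucket, "low") for _ in range(10))
high = sum(allow_request(bucket, "high") for _ in range(10))
print(low, high)  # 5 5
```

Under load, low-value features are refused first while high-value ones still get through, and a rollout can grow from a small percentage to full traffic without users flipping in and out of the feature.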

How Rezolut Helps Teams Design LLM-Ready Systems

At Rezolut Infotech, LLM readiness is treated as a system design problem, not a tooling choice.

Rezolut helps teams:

- assess architectural readiness for LLM adoption,

- design decoupled AI layers,

- implement RAG and agentic patterns safely,

- introduce observability and cost controls,

- avoid hype-driven overengineering,

- and align AI systems with product and scaling strategy.

The goal is not to adopt LLMs faster – but to adopt them correctly.

A Simple LLM-Readiness Checklist

Before scaling LLM usage, ask:

- Can we swap models without major rewrites?

- Do we know the cost of each AI feature?

- Can the system function if the model fails?

- Are workflows deterministic?

- Is sensitive data protected?

- Can we observe and audit behavior?

If not, the architecture is not ready yet.

Conclusion

LLMs are powerful – but only when embedded in the right architecture.

Without deliberate design, LLM systems become:

- expensive,

- fragile,

- and hard to control.

With LLM-ready architecture, AI becomes:

- scalable,

- governable,

- and aligned with real business outcomes.

As AI capabilities evolve rapidly, architecture is one of the few stable advantages organizations can build.

Those who invest in LLM-ready foundations today will be able to adapt tomorrow, while others are forced into rewrites and rollbacks.